This article is about a particular family of continuous distributions referred to as the generalized Pareto distribution. For the hierarchy of generalized Pareto distributions, see Pareto distribution.

In statistics, the generalized Pareto distribution (GPD) is a family of continuous probability distributions. It is often used to model the tails of another distribution. It is specified by three parameters: location $\mu$, scale $\sigma$, and shape $\xi$. [2] [3] [4] Some references give the shape parameter as $\kappa = -\xi$. [5]

Generalized Pareto distribution

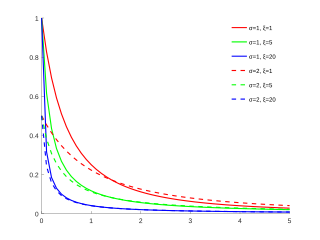

Probability density function

GPD distribution functions for $\mu = 0$ and different values of $\sigma$ and $\xi$

Cumulative distribution function

| Property | Value |
|---|---|
| Parameters | $\mu \in (-\infty,\infty)$ location (real); $\sigma \in (0,\infty)$ scale (real); $\xi \in (-\infty,\infty)$ shape (real) |
| Support | $x \geqslant \mu$ for $\xi \geqslant 0$; $\mu \leqslant x \leqslant \mu - \sigma/\xi$ for $\xi < 0$ |
| PDF | $\frac{1}{\sigma}(1+\xi z)^{-(1/\xi+1)}$, where $z = \frac{x-\mu}{\sigma}$ |
| CDF | $1-(1+\xi z)^{-1/\xi}$ |
| Mean | $\mu + \frac{\sigma}{1-\xi}$ for $\xi < 1$ |
| Median | $\mu + \frac{\sigma(2^{\xi}-1)}{\xi}$ |
| Mode | $\mu$ |
| Variance | $\frac{\sigma^{2}}{(1-\xi)^{2}(1-2\xi)}$ for $\xi < 1/2$ |
| Skewness | $\frac{2(1+\xi)\sqrt{1-2\xi}}{1-3\xi}$ for $\xi < 1/3$ |
| Excess kurtosis | $\frac{3(1-2\xi)(2\xi^{2}+\xi+3)}{(1-3\xi)(1-4\xi)} - 3$ for $\xi < 1/4$ |
| Entropy | $\log(\sigma) + \xi + 1$ |
| MGF | $e^{\theta\mu}\sum_{j=0}^{\infty}\left[\frac{(\theta\sigma)^{j}}{\prod_{k=0}^{j}(1-k\xi)}\right]$ for $k\xi < 1$ |
| CF | $e^{it\mu}\sum_{j=0}^{\infty}\left[\frac{(it\sigma)^{j}}{\prod_{k=0}^{j}(1-k\xi)}\right]$ for $k\xi < 1$ |
| Method of moments | $\xi = \frac{1}{2}\left(1 - \frac{(E[X]-\mu)^{2}}{V[X]}\right)$; $\sigma = (E[X]-\mu)(1-\xi)$ |
| Expected shortfall | $\begin{cases}\mu + \sigma\left[\frac{(1-p)^{-\xi}}{1-\xi} + \frac{(1-p)^{-\xi}-1}{\xi}\right], & \xi \neq 0 \\ \mu + \sigma[1-\ln(1-p)], & \xi = 0\end{cases}$ [1] |
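The method-of-moments formulas listed above can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation: the function names are hypothetical, the location $\mu$ is assumed to be known, and the sample mean and variance simply stand in for $E[X]$ and $V[X]$. The estimates are only meaningful when the variance exists, that is for $\xi < 1/2$.

```python
from statistics import fmean, pvariance


def gpd_params_from_moments(mean, variance, mu=0.0):
    """Solve the two moment equations for (xi, sigma), with mu assumed known."""
    xi = 0.5 * (1.0 - (mean - mu) ** 2 / variance)
    sigma = (mean - mu) * (1.0 - xi)
    return xi, sigma


def gpd_method_of_moments(sample, mu=0.0):
    """Plug the sample mean and variance into the moment equations."""
    return gpd_params_from_moments(fmean(sample), pvariance(sample), mu)


# Round trip: start from xi = 0.2, sigma = 2, mu = 1 and recover them
# from the exact mean and variance given in the table.
true_xi, true_sigma, true_mu = 0.2, 2.0, 1.0
exact_mean = true_mu + true_sigma / (1.0 - true_xi)
exact_var = true_sigma ** 2 / ((1.0 - true_xi) ** 2 * (1.0 - 2.0 * true_xi))
print(gpd_params_from_moments(exact_mean, exact_var, mu=true_mu))
```

Feeding the exact moments back in recovers the original parameters up to floating-point error, which is a convenient sanity check on the two formulas.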

The related location-scale family of distributions is obtained by replacing the argument $z$ by $\frac{x-\mu}{\sigma}$.

The cumulative distribution function of $X \sim GPD(\mu,\sigma,\xi)$ ($\mu \in \mathbb{R}$, $\sigma > 0$, and $\xi \in \mathbb{R}$) is

$$F_{(\mu,\sigma,\xi)}(x) = \begin{cases} 1 - \left(1 + \frac{\xi(x-\mu)}{\sigma}\right)^{-1/\xi} & \text{for } \xi \neq 0, \\ 1 - \exp\left(-\frac{x-\mu}{\sigma}\right) & \text{for } \xi = 0, \end{cases}$$

where the support of $X$ is $x \geqslant \mu$ when $\xi \geqslant 0$, and $\mu \leqslant x \leqslant \mu - \sigma/\xi$ when $\xi < 0$.

The probability density function (pdf) of $X \sim GPD(\mu,\sigma,\xi)$ is

$$f_{(\mu,\sigma,\xi)}(x) = \frac{1}{\sigma}\left(1 + \frac{\xi(x-\mu)}{\sigma}\right)^{\left(-\frac{1}{\xi}-1\right)},$$

again, for $x \geqslant \mu$ when $\xi \geqslant 0$, and $\mu \leqslant x \leqslant \mu - \sigma/\xi$ when $\xi < 0$.
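The cdf and pdf above translate almost directly into code. The following Python sketch is illustrative only: the function names `gpd_cdf` and `gpd_pdf` are hypothetical, values outside the support are mapped to 0 or 1 by assumption, and the $\xi = 0$ branch of the pdf uses the exponential density $e^{-z}/\sigma$, which is the derivative of the $\xi = 0$ cdf.

```python
import math


def gpd_cdf(x, mu=0.0, sigma=1.0, xi=0.0):
    """CDF of GPD(mu, sigma, xi); 0 below the support and 1 above it."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    if z <= 0:
        return 0.0
    if xi == 0:
        return 1.0 - math.exp(-z)
    if xi < 0 and z >= -1.0 / xi:      # at or beyond mu - sigma/xi
        return 1.0
    return 1.0 - (1.0 + xi * z) ** (-1.0 / xi)


def gpd_pdf(x, mu=0.0, sigma=1.0, xi=0.0):
    """PDF of GPD(mu, sigma, xi); 0 outside the support."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    if z < 0:
        return 0.0
    if xi == 0:
        return math.exp(-z) / sigma
    if xi < 0 and z >= -1.0 / xi:      # endpoint treated as outside (measure zero)
        return 0.0
    return (1.0 + xi * z) ** (-1.0 / xi - 1.0) / sigma


# The cdf evaluated at the median mu + sigma * (2**xi - 1) / xi should be 1/2.
mu, sigma, xi = 1.0, 2.0, 0.3
median = mu + sigma * (2.0 ** xi - 1.0) / xi
print(gpd_cdf(median, mu, sigma, xi))
```

Treating the upper endpoint as outside the support in `gpd_pdf` avoids raising zero to a negative power when $\xi < -1$; it changes the density only on a set of probability zero.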

The pdf is a solution of the following differential equation: [citation needed]

$$\left\{\begin{array}{l} f'(x)(-\mu\xi + \sigma + \xi x) + (\xi+1)f(x) = 0, \\ f(0) = \frac{\left(1 - \frac{\mu\xi}{\sigma}\right)^{-\frac{1}{\xi}-1}}{\sigma} \end{array}\right\}$$
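As a quick check of the differential equation, for $\xi \neq 0$ one can differentiate the pdf stated earlier and multiply by $\sigma + \xi(x-\mu)$. The short derivation below uses only that pdf and no additional assumptions.

```latex
\begin{align*}
f(x) &= \frac{1}{\sigma}\left(1+\frac{\xi(x-\mu)}{\sigma}\right)^{-\frac{1}{\xi}-1}, \\
f'(x) &= \frac{1}{\sigma}\left(-\frac{1}{\xi}-1\right)\frac{\xi}{\sigma}
         \left(1+\frac{\xi(x-\mu)}{\sigma}\right)^{-\frac{1}{\xi}-2}
       = -\frac{1+\xi}{\sigma^{2}}
         \left(1+\frac{\xi(x-\mu)}{\sigma}\right)^{-\frac{1}{\xi}-2}, \\
(-\mu\xi+\sigma+\xi x)\,f'(x)
      &= \sigma\left(1+\frac{\xi(x-\mu)}{\sigma}\right) f'(x)
       = -\frac{1+\xi}{\sigma}
         \left(1+\frac{\xi(x-\mu)}{\sigma}\right)^{-\frac{1}{\xi}-1}
       = -(\xi+1)\,f(x).
\end{align*}
```

Setting $x = 0$ in the pdf gives the stated initial condition, which is meaningful only when $0$ lies in the support.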

If the shape $\xi$ and location $\mu$ are both zero, the GPD is equivalent to the exponential distribution.

With shape $\xi = -1$ and location $\mu = 0$, the GPD is equivalent to the continuous uniform distribution $U(0,\sigma)$. [7]

With shape $\xi > 0$ and location $\mu = \sigma/\xi$, the GPD is equivalent to the Pareto distribution with scale $x_{m} = \sigma/\xi$ and shape $\alpha = 1/\xi$.

If $X \sim GPD(\mu = 0, \sigma, \xi)$, then $Y = \log(X) \sim exGPD(\sigma,\xi)$ [1]. (exGPD stands for the exponentiated generalized Pareto distribution.)

GPD is similar to the Burr distribution.

Generating generalized Pareto random variables

Generating GPD random variables

If $U$ is uniformly distributed on $(0, 1]$, then

$$X = \mu + \frac{\sigma(U^{-\xi}-1)}{\xi} \sim GPD(\mu,\sigma,\xi \neq 0)$$

and

$$X = \mu - \sigma\ln(U) \sim GPD(\mu,\sigma,\xi = 0).$$

Both formulas are obtained by inversion of the cdf.
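The inversion formulas can be sketched in Python using only the standard library. The function name `gpd_sample` and the optional `rng` argument are hypothetical conveniences; `random.random()` returns values in $[0, 1)$, so `1 - random()` is used to obtain a uniform variate on $(0, 1]$ as the formulas require.

```python
import math
import random


def gpd_sample(mu, sigma, xi, rng=random):
    """Draw one GPD(mu, sigma, xi) variate by inverting the cdf."""
    u = 1.0 - rng.random()              # uniform on (0, 1], so log(u) is finite
    if xi == 0:
        return mu - sigma * math.log(u)
    return mu + sigma * (u ** (-xi) - 1.0) / xi


# Draws with a negative shape stay inside [mu, mu - sigma/xi].
rng = random.Random(0)
draws = [gpd_sample(1.0, 2.0, -0.5, rng) for _ in range(1000)]
print(min(draws) >= 1.0, max(draws) <= 1.0 - 2.0 / -0.5)
```

Passing a seeded `random.Random` instance makes the draws reproducible without touching the module-level generator.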

In the MATLAB Statistics Toolbox, the "gprnd" command generates generalized Pareto random numbers.

GPD as an Exponential-Gamma Mixture


A GPD random variable can also be expressed as an exponential random variable with a gamma-distributed rate parameter. If

$$X \mid \Lambda \sim \operatorname{Exp}(\Lambda)$$

and

$$\Lambda \sim \operatorname{Gamma}(\alpha, \beta),$$

where $\beta$ is the rate parameter of the gamma distribution, then

$$X \sim \operatorname{GPD}(\xi = 1/\alpha,\ \sigma = \beta/\alpha).$$

Notice, however, that since the parameters of the gamma distribution must be greater than zero, we obtain the additional restriction that $\xi$ must be positive.
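The mixture representation can be verified by integrating the exponential survival function against the gamma density. The derivation below assumes $\mu = 0$ and that $\beta$ is the rate parameter of the gamma distribution.

```latex
\begin{align*}
P(X > x)
  &= \int_{0}^{\infty} e^{-\lambda x}\,
     \frac{\beta^{\alpha}}{\Gamma(\alpha)}\,\lambda^{\alpha-1} e^{-\beta\lambda}\,d\lambda
   = \frac{\beta^{\alpha}}{\Gamma(\alpha)}\cdot\frac{\Gamma(\alpha)}{(\beta+x)^{\alpha}}
   = \left(1+\frac{x}{\beta}\right)^{-\alpha}.
\end{align*}
```

With $\xi = 1/\alpha$ and $\sigma = \beta/\alpha$ we have $\xi/\sigma = 1/\beta$, so $P(X > x) = (1 + \xi x/\sigma)^{-1/\xi}$, which is the GPD survival function for $\xi > 0$.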

In addition to this mixture (or compound) expression, the generalized Pareto distribution can also be expressed as a simple ratio. Concretely, for $Y \sim \text{Exponential}(1)$ and $Z \sim \text{Gamma}(1/\xi, 1)$, we have

$$\mu + \sigma\frac{Y}{\xi Z} \sim \text{GPD}(\mu,\sigma,\xi).$$

This is a consequence of the mixture representation after setting $\beta = \alpha$.
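The ratio representation lends itself to a small simulation check. The Python sketch below is illustrative only: the helper names are hypothetical, the parameter values are arbitrary, and agreement is judged loosely by comparing the empirical median with the closed-form median $\mu + \sigma(2^{\xi}-1)/\xi$. Note that `random.gammavariate(alpha, beta)` takes a shape and a scale; with scale 1 the rate is also 1.

```python
import random


def gpd_via_ratio(mu, sigma, xi, rng):
    """One GPD(mu, sigma, xi) draw for xi > 0, as mu + sigma * Y / (xi * Z)."""
    if xi <= 0:
        raise ValueError("the ratio representation needs xi > 0")
    y = rng.expovariate(1.0)               # Y ~ Exponential(1)
    z = rng.gammavariate(1.0 / xi, 1.0)    # Z ~ Gamma(shape 1/xi, scale 1)
    return mu + sigma * y / (xi * z)


def gpd_median(mu, sigma, xi):
    """Closed-form median of GPD(mu, sigma, xi) for xi != 0."""
    return mu + sigma * (2.0 ** xi - 1.0) / xi


rng = random.Random(0)
draws = sorted(gpd_via_ratio(0.0, 2.0, 0.5, rng) for _ in range(100_000))
empirical_median = draws[len(draws) // 2]
print(empirical_median, gpd_median(0.0, 2.0, 0.5))
```

The two printed numbers should agree to within sampling error; a median is used rather than a mean because it exists for every $\xi$.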

Exponentiated generalized Pareto distribution


The pdf of the $exGPD(\sigma,\xi)$ for different values of $\sigma$ and $\xi$.

If $X \sim GPD(\mu = 0, \sigma, \xi)$, then $Y = \log(X)$ is distributed according to the exponentiated generalized Pareto distribution, denoted by $Y \sim exGPD(\sigma,\xi)$.

The probability density function (pdf) of $Y \sim exGPD(\sigma,\xi)$ $(\sigma > 0)$ is

$$g_{(\sigma,\xi)}(y) = \begin{cases} \frac{e^{y}}{\sigma}\left(1 + \frac{\xi e^{y}}{\sigma}\right)^{-1/\xi - 1} & \text{for } \xi \neq 0, \\ \frac{1}{\sigma} e^{y - e^{y}/\sigma} & \text{for } \xi = 0, \end{cases}$$

where the support is $-\infty < y < \infty$ for $\xi \geq 0$, and $-\infty < y \leq \log(-\sigma/\xi)$ for $\xi < 0$.
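The exGPD density follows from the GPD density by the change of variables $x = e^{y}$. The short derivation below assumes $\mu = 0$ and writes $f$ for the GPD pdf.

```latex
\begin{align*}
g_{(\sigma,\xi)}(y)
  &= f_{(0,\sigma,\xi)}(e^{y})\,\left|\frac{d}{dy}e^{y}\right|
   = \frac{1}{\sigma}\left(1+\frac{\xi e^{y}}{\sigma}\right)^{-\frac{1}{\xi}-1} e^{y}
   && (\xi \neq 0), \\
g_{(\sigma,0)}(y)
  &= \frac{1}{\sigma}\,e^{-e^{y}/\sigma}\,e^{y}
   = \frac{1}{\sigma}\,e^{\,y-e^{y}/\sigma}
   && (\xi = 0).
\end{align*}
```

The support transforms in the same way: $x > 0$ maps to $y \in (-\infty, \infty)$, and for $\xi < 0$ the upper endpoint $x \leq -\sigma/\xi$ maps to $y \leq \log(-\sigma/\xi)$.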

For all $\xi$, $\log \sigma$ becomes the location parameter.

The exGPD has finite moments of all orders for all $\sigma > 0$ and $-\infty < \xi < \infty$.

The variance of the $exGPD(\sigma,\xi)$ as a function of $\xi$. The variance depends only on $\xi$; at $\xi = 0$ it equals $\psi'(1) = \pi^{2}/6$.

The moment-generating function of $Y \sim exGPD(\sigma,\xi)$ is

$$M_{Y}(s) = E[e^{sY}] = \begin{cases} -\frac{1}{\xi}\left(-\frac{\sigma}{\xi}\right)^{s} B(s+1, -1/\xi) & \text{for } s \in (-1,\infty),\ \xi < 0, \\ \frac{1}{\xi}\left(\frac{\sigma}{\xi}\right)^{s} B(s+1, 1/\xi - s) & \text{for } s \in (-1, 1/\xi),\ \xi > 0, \\ \sigma^{s}\,\Gamma(1+s) & \text{for } s \in (-1,\infty),\ \xi = 0, \end{cases}$$

where $B(a,b)$ and $\Gamma(a)$ denote the beta function and gamma function, respectively.

The expected value of $Y \sim exGPD(\sigma,\xi)$ depends on both the scale $\sigma$ and the shape $\xi$, with $\xi$ entering through the digamma function:

$$E[Y] = \begin{cases} \log\left(-\frac{\sigma}{\xi}\right) + \psi(1) - \psi(-1/\xi + 1) & \text{for } \xi < 0, \\ \log\left(\frac{\sigma}{\xi}\right) + \psi(1) - \psi(1/\xi) & \text{for } \xi > 0, \\ \log\sigma + \psi(1) & \text{for } \xi = 0. \end{cases}$$

Note that for a fixed value of $\xi \in (-\infty,\infty)$, $\log \sigma$ acts as the location parameter under the exponentiated generalized Pareto distribution.

The variance of $Y \sim exGPD(\sigma,\xi)$ depends on the shape parameter $\xi$ only, through the polygamma function of order 1 (also called the trigamma function):

$$Var[Y] = \begin{cases} \psi'(1) - \psi'(-1/\xi + 1) & \text{for } \xi < 0, \\ \psi'(1) + \psi'(1/\xi) & \text{for } \xi > 0, \\ \psi'(1) & \text{for } \xi = 0. \end{cases}$$
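The mean and variance formulas for the exGPD can be evaluated numerically once the digamma and trigamma functions are available. The Python sketch below is an illustration under stated assumptions: the standard library has no digamma or trigamma, so the hypothetical helpers `digamma` and `trigamma` approximate them for positive arguments with the usual recurrence plus a truncated asymptotic series (accuracy around $10^{-9}$, not a production-grade implementation). The functions `exgpd_mean` and `exgpd_variance` are likewise hypothetical names.

```python
import math


def digamma(x):
    """psi(x) for x > 0: shift x up to >= 6, then use the asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc -= 1.0 / x                  # psi(x) = psi(x + 1) - 1/x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 * (1.0 / 252 - inv2 / 240)))
    return acc + math.log(x) - 0.5 / x - series


def trigamma(x):
    """psi'(x) for x > 0: shift x up to >= 6, then use the asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc += 1.0 / (x * x)            # psi'(x) = psi'(x + 1) + 1/x^2
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    series = inv * inv2 * (1.0 / 6 - inv2 * (1.0 / 30 - inv2 * (1.0 / 42 - inv2 / 30)))
    return acc + inv + 0.5 * inv2 + series


def exgpd_mean(sigma, xi):
    """E[Y] for Y ~ exGPD(sigma, xi), following the three cases above."""
    if xi < 0:
        return math.log(-sigma / xi) + digamma(1.0) - digamma(1.0 - 1.0 / xi)
    if xi > 0:
        return math.log(sigma / xi) + digamma(1.0) - digamma(1.0 / xi)
    return math.log(sigma) + digamma(1.0)


def exgpd_variance(xi):
    """Var[Y] for Y ~ exGPD(sigma, xi); it does not depend on sigma."""
    if xi < 0:
        return trigamma(1.0) - trigamma(1.0 - 1.0 / xi)
    if xi > 0:
        return trigamma(1.0) + trigamma(1.0 / xi)
    return trigamma(1.0)


print(exgpd_variance(0.0), math.pi ** 2 / 6)
```

A useful sanity check is $\xi = -1$: then $X$ is uniform on $(0,\sigma)$, so $-\log(X/\sigma)$ is a standard exponential variable and $Y = \log X$ has mean $\log\sigma - 1$ and variance $1$.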

See the right panel for the variance as a function of $\xi$. Note that $\psi'(1) = \pi^{2}/6 \approx 1.644934$.

Note that the roles of the scale parameter $\sigma$ and the shape parameter $\xi$ under $Y \sim exGPD(\sigma,\xi)$ are separately interpretable, which may make estimation of $\xi$ more robust than working with $X \sim GPD(\sigma,\xi)$ directly [2]. The roles of the two parameters are entangled under $X \sim GPD(\mu = 0, \sigma, \xi)$; for example, $Var(X)$ depends on both parameters.


Assume that $X_{1:n} = (X_{1}, \cdots, X_{n})$ are $n$ observations from an unknown heavy-tailed distribution $F$ whose tail distribution is regularly varying with tail index $1/\xi$ (hence, the corresponding shape parameter is $\xi$). To be specific, the tail distribution is described as

$$\bar{F}(x) = 1 - F(x) = L(x) \cdot x^{-1/\xi}, \quad \text{for some } \xi > 0, \text{ where } L \text{ is a slowly varying function.}$$

It is of particular interest in extreme value theory to estimate the shape parameter $\xi$, especially when $\xi$ is positive (the heavy-tailed case).

Let $F_{u}$ be the conditional excess distribution function over a threshold $u$. The Pickands–Balkema–de Haan theorem (Pickands, 1975; Balkema and de Haan, 1974) states that for a large class of underlying distribution functions $F$, and large $u$, $F_{u}$ is well approximated by the generalized Pareto distribution (GPD). This motivates peaks-over-threshold (POT) methods for estimating $\xi$: the GPD plays the key role in the POT approach.

A well-known estimator using the POT methodology is Hill's estimator, which is formulated as follows. For $1 \leq i \leq n$, write $X_{(i)}$ for the $i$-th largest value of $X_{1}, \cdots, X_{n}$. With this notation, Hill's estimator (see page 190 of Embrechts et al. [3]) based on the $k$ upper order statistics is defined as

$$\widehat{\xi}_{k}^{\text{Hill}} = \widehat{\xi}_{k}^{\text{Hill}}(X_{1:n}) = \frac{1}{k-1}\sum_{j=1}^{k-1}\log\left(\frac{X_{(j)}}{X_{(k)}}\right), \quad \text{for } 2 \leq k \leq n.$$
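The definition of Hill's estimator can be written out directly in Python. This is a minimal sketch: the function name `hill_estimator` is hypothetical, and the code assumes that the $k$ largest observations are strictly positive so that the logarithms are defined.

```python
import math


def hill_estimator(data, k):
    """Hill estimate of xi from the k upper order statistics (2 <= k <= n)."""
    n = len(data)
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    ordered = sorted(data, reverse=True)    # ordered[0] is X_(1), the largest
    x_k = ordered[k - 1]                    # X_(k), the k-th largest
    if x_k <= 0:
        raise ValueError("the k largest observations must be positive")
    return sum(math.log(x / x_k) for x in ordered[: k - 1]) / (k - 1)


# Toy data whose logarithms are 0, 1, 2, 3, so the sums are easy to follow.
data = [1.0, math.e, math.e ** 2, math.e ** 3]
print([hill_estimator(data, k) for k in (2, 3, 4)])
```

For this toy data the log-spacings relative to $X_{(k)}$ are whole numbers, so the three estimates are approximately 1, 1.5 and 2.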

In practice, the Hill estimator is used as follows. First, calculate the estimator $\widehat{\xi}_{k}^{\text{Hill}}$ at each integer $k \in \{2, \cdots, n\}$, and then plot the ordered pairs $\{(k, \widehat{\xi}_{k}^{\text{Hill}})\}_{k=2}^{n}$. Then, select from the set of Hill estimators $\{\widehat{\xi}_{k}^{\text{Hill}}\}_{k=2}^{n}$ those that are roughly constant with respect to $k$: these stable values are regarded as reasonable estimates of the shape parameter $\xi$. If $X_{1}, \cdots, X_{n}$ are i.i.d., then Hill's estimator is a consistent estimator of the shape parameter $\xi$ [4].
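The sequence of Hill estimates over all $k$ can be computed in a single pass with running sums of logarithms. The Python sketch below is illustrative only: `hill_path` is a hypothetical helper, the data are assumed strictly positive, and the input is a deterministic stand-in for heavy-tailed data (exact Pareto quantiles with $\xi = 0.5$) rather than a real sample. Choosing the stable region is still a judgment made from the plot.

```python
import math


def hill_path(data):
    """Return [(k, xi_hat_k)] for k = 2..n using running sums of log-values."""
    logs = sorted((math.log(x) for x in data), reverse=True)
    path = []
    running = 0.0                       # sum of the k-1 largest log-values
    for k in range(2, len(logs) + 1):
        running += logs[k - 2]
        path.append((k, running / (k - 1) - logs[k - 1]))
    return path


# Synthetic input (an assumption for illustration): Pareto quantiles, xi = 0.5.
xi_true = 0.5
n = 2000
data = [((i + 0.5) / n) ** (-xi_true) for i in range(n)]
path = hill_path(data)
for k, estimate in path:
    if k in (10, 50, 200, 1000):
        print(k, round(estimate, 3))
```

On this idealized input the estimates settle close to the true shape for moderate and large $k$; with real data the path is typically noisy for small $k$ and biased for large $k$, which is why a stable middle range is sought.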

Note that the Hill estimator $\widehat{\xi}_{k}^{\text{Hill}}$ makes use of the log-transformation of the observations $X_{1:n} = (X_{1}, \cdots, X_{n})$. (Pickands' estimator $\widehat{\xi}_{k}^{\text{Pickand}}$ also employs the log-transformation, but in a slightly different way [5].)

1. Norton, Matthew; Khokhlov, Valentyn; Uryasev, Stan (2019). "Calculating CVaR and bPOE for common probability distributions with application to portfolio optimization and density estimation" (PDF). Annals of Operations Research. 299 (1–2). Springer: 1281–1315. arXiv:1811.11301. doi:10.1007/s10479-019-03373-1. S2CID 254231768. Archived from the original (PDF) on 2023-03-31. Retrieved 2023-02-27.
2. Coles, Stuart (2001-12-12). An Introduction to Statistical Modeling of Extreme Values. ISBN 9781852334598.
3. Dargahi-Noubary, G. R. (1989). "On tail estimation: An improved method". Mathematical Geology. 21 (8): 829–842. Bibcode:1989MatGe..21..829D. doi:10.1007/BF00894450. S2CID 122710961.
4. Hosking, J. R. M.; Wallis, J. R. (1987). "Parameter and Quantile Estimation for the Generalized Pareto Distribution". Technometrics. 29 (3): 339–349. doi:10.2307/1269343. JSTOR 1269343.
5. Davison, A. C. (1984-09-30). "Modelling Excesses over High Thresholds, with an Application". In de Oliveira, J. Tiago (ed.). Statistical Extremes and Applications. Kluwer. p. 462. ISBN 9789027718044.
6. Embrechts, Paul; Klüppelberg, Claudia; Mikosch, Thomas (1997-01-01). Modelling extremal events for insurance and finance. ISBN 9783540609315.
7. Castillo, Enrique; Hadi, Ali S. (1997). "Fitting the generalized Pareto distribution to data". Journal of the American Statistical Association. 92 (440): 1609–1620.

- Pickands, James (1975). "Statistical inference using extreme order statistics" (PDF). Annals of Statistics. 3: 119–131. doi:10.1214/aos/1176343003.
- Balkema, A.; De Haan, Laurens (1974). "Residual life time at great age". Annals of Probability. 2 (5): 792–804. doi:10.1214/aop/1176996548.
- Lee, Seyoon; Kim, J.H.K. (2018). "Exponentiated generalized Pareto distribution: Properties and applications towards extreme value theory". Communications in Statistics - Theory and Methods. 48 (8): 1–25. arXiv:1708.01686. doi:10.1080/03610926.2018.1441418. S2CID 88514574.
- N. L. Johnson; S. Kotz; N. Balakrishnan (1994). Continuous Univariate Distributions Volume 1, second edition. New York: Wiley. ISBN 978-0-471-58495-7.
- Barry C. Arnold (2011). "Chapter 7: Pareto and Generalized Pareto Distributions". In Duangkamon Chotikapanich (ed.). Modeling Distributions and Lorenz Curves. New York: Springer. ISBN 9780387727967.
- Arnold, B. C.; Laguna, L. (1977). On generalized Pareto distributions with applications to income data. Ames, Iowa: Iowa State University, Department of Economics.
