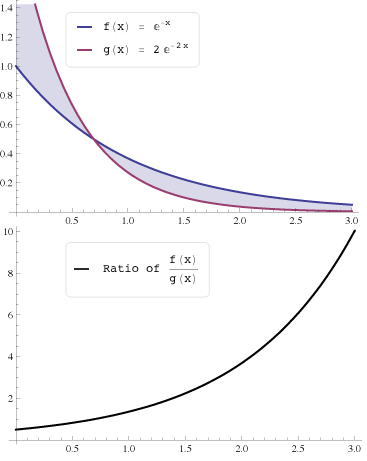

The ratio of the density functions above is monotone in the argument $x$, so $f(x)/g(x)$ satisfies the monotone likelihood ratio property.

In statistics, the monotone likelihood ratio property is a property of the ratio of two probability density functions (PDFs). Formally, distributions $f(x)$ and $g(x)$ bear the property if

$$\text{for every } x_2 > x_1, \quad \frac{f(x_2)}{g(x_2)} \ge \frac{f(x_1)}{g(x_1)},$$

that is, if the ratio is nondecreasing in the argument $x$.

If the functions are first-differentiable, the property may sometimes be stated

$$\frac{d}{dx}\left(\frac{f(x)}{g(x)}\right) \ge 0.$$

For two distributions that satisfy the definition with respect to some argument $x$, we say they "have the MLRP in $x$." For a family of distributions that all satisfy the definition with respect to some statistic $T(X)$, we say they "have the MLR in $T(X)$."
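As a rough illustration of the definition, the sketch below checks on a finite grid whether the ratio of two densities is nondecreasing. The helper name `has_mlrp`, the grid, and the two unit-variance normal densities are assumptions chosen for the example; a grid check can only suggest, not prove, that the property holds.

```python
from statistics import NormalDist

def has_mlrp(f, g, grid, tol=1e-12):
    """Return True if f(x)/g(x) is nondecreasing along the sorted grid."""
    ratios = [f(x) / g(x) for x in sorted(grid)]
    return all(b >= a - tol for a, b in zip(ratios, ratios[1:]))

# Hypothetical example densities (assumptions, not taken from the article).
f = NormalDist(mu=1.0, sigma=1.0).pdf
g = NormalDist(mu=0.0, sigma=1.0).pdf

grid = [i / 10 for i in range(-30, 31)]   # x from -3.0 to 3.0

f_over_g = has_mlrp(f, g, grid)   # f/g equals exp(x - 1/2), increasing in x
g_over_f = has_mlrp(g, f, grid)   # the reversed ratio is decreasing in x
print(f_over_g, g_over_f)
```

For these two densities the ratio has the closed form $\exp(x - 1/2)$, so the first check should succeed and the reversed one should fail.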

Intuition

The MLRP is used to represent a data-generating process that enjoys a straightforward relationship between the magnitude of some observed variable and the distribution it draws from. If $f(x)$ satisfies the MLRP with respect to $g(x)$, the higher the observed value $x$, the more likely it was drawn from distribution $f$ rather than $g$. As usual for monotonic relationships, the likelihood ratio's monotonicity comes in handy in statistics, particularly when using maximum-likelihood estimation. Also, distribution families with MLR have a number of well-behaved stochastic properties, such as first-order stochastic dominance and monotone hazard rates. Unfortunately, as is also usual, the strength of this assumption comes at the price of realism. Many processes in the world do not exhibit a monotonic correspondence between input and output.

Example: Working hard or slacking off

Suppose you are working on a project, and you can either work hard or slack off. Call your choice of effort $e$ and the quality of the resulting project $q$. If the MLRP holds for the distribution of $q$ conditional on your effort $e$, the higher the quality the more likely you worked hard. Conversely, the lower the quality the more likely you slacked off.

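- 1: Choose effort $e \in \{H, L\}$, where $H$ means high effort and $L$ means low effort.

- 2: Observe $q$ drawn from $f(q \mid e)$. By Bayes' law with a uniform prior, $$\Pr[e = H \mid q] = \frac{f(q \mid H)}{f(q \mid H) + f(q \mid L)}.$$

- 3: Suppose $f(q \mid e)$ satisfies the MLRP. Rearranging, the probability the worker worked hard is $$\frac{1}{1 + f(q \mid L)/f(q \mid H)},$$ which, thanks to the MLRP, is monotonically increasing in $q$ (because $f(q \mid L)/f(q \mid H)$ is decreasing in $q$).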

Hence an employer conducting a "performance review" can infer an employee's behavior from the merits of the work.
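The Bayes step in this example can be sketched numerically. The two quality distributions below (unit-variance normals centred at 1 for high effort and 0 for low effort) and the function name `prob_worked_hard` are purely illustrative assumptions; any pair of densities with the MLRP would show the same monotone pattern.

```python
from statistics import NormalDist

# Hypothetical quality distributions (assumptions, not from the article).
f_high = NormalDist(mu=1.0, sigma=1.0)   # density of quality given high effort
f_low = NormalDist(mu=0.0, sigma=1.0)    # density of quality given low effort

def prob_worked_hard(q):
    """Posterior Pr[e = H | q] under a uniform prior over {H, L}."""
    return 1.0 / (1.0 + f_low.pdf(q) / f_high.pdf(q))

qualities = [-2.0, -1.0, 0.0, 0.5, 1.0, 2.0]
posteriors = [prob_worked_hard(q) for q in qualities]

for q, p in zip(qualities, posteriors):
    print(f"q = {q:+.1f}  Pr[e = H | q] = {p:.3f}")
```

With these assumed densities the posterior crosses one half at the midpoint between the two means and rises with the observed quality.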

Families of distributions satisfying MLR

Statistical models often assume that data are generated by a distribution from some family of distributions and seek to determine that distribution. This task is simplified if the family has the monotone likelihood ratio property (MLRP).

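A family of density functions $\{f_\theta(x) \mid \theta \in \Theta\}$ indexed by a parameter $\theta$ taking values in an ordered set $\Theta$ is said to have a monotone likelihood ratio (MLR) in the statistic $T(X)$ if for any $\theta_1 < \theta_2$,

- $\dfrac{f_{\theta_2}(X = x_1, x_2, x_3, \ldots)}{f_{\theta_1}(X = x_1, x_2, x_3, \ldots)}$ is a non-decreasing function of $T(X)$.

Then we say the family of distributions "has MLR in $T(X)$".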

List of families

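| Family | $T(X)$ in which $f_\theta(X)$ has the MLR |
|---|---|
| Exponential | $\sum x_i$, the sum of the observations |
| Binomial | $\sum x_i$, the sum of the observations |
| Poisson | $\sum x_i$, the sum of the observations |
| Normal | if $\sigma$ known, $\sum x_i$, the sum of the observations |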

Hypothesis testing

If the family of random variables has the MLRP in $T(X)$, a uniformly most powerful test can easily be determined for the hypothesis $H_0 : \theta \le \theta_0$ versus $H_1 : \theta > \theta_0$.

Example: Effort and output

Let $e$ be an input into a stochastic technology (a worker's effort, for instance) and $y$ its output, the likelihood of which is described by a probability density function $f(y; e)$. Then the monotone likelihood ratio property (MLRP) of the family $f$ is expressed as follows: for any $e_1, e_2$, the fact that $e_2 > e_1$ implies that the ratio $f(y; e_2)/f(y; e_1)$ is increasing in $y$.

Relation to other statistical properties

Monotone likelihood ratios are used in several areas of statistical theory, including point estimation and hypothesis testing, as well as in probability models.

Exponential families

One-parameter exponential families have monotone likelihood ratios. In particular, the one-dimensional exponential family of probability density functions or probability mass functions with

$$f_\theta(x) = c(\theta)\, h(x) \exp\bigl(\pi(\theta)\, T(x)\bigr)$$

has a monotone non-decreasing likelihood ratio in the sufficient statistic $T(x)$, provided that $\pi(\theta)$ is non-decreasing.
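To make the exponential-family statement concrete, the sketch below uses the Poisson family, whose mass function can be written in exponential-family form with natural parameter $\log\lambda$ (non-decreasing in $\lambda$) and sufficient statistic equal to the sum of the observations. The rates, sample values, and helper names are assumptions for illustration only.

```python
import math

def poisson_pmf(x, lam):
    """Poisson probability mass function at an integer x >= 0."""
    return math.exp(-lam) * lam**x / math.factorial(x)

def joint_ratio(sample, lam_lo, lam_hi):
    """Likelihood ratio f_{lam_hi}(sample) / f_{lam_lo}(sample) for i.i.d. data."""
    num = math.prod(poisson_pmf(x, lam_hi) for x in sample)
    den = math.prod(poisson_pmf(x, lam_lo) for x in sample)
    return num / den

lam_lo, lam_hi = 1.0, 2.5   # hypothetical rates with lam_lo < lam_hi

# Two samples with the same sum T = 6 should give the same ratio ...
r_a = joint_ratio([1, 2, 3], lam_lo, lam_hi)
r_b = joint_ratio([6, 0, 0], lam_lo, lam_hi)
print(r_a, r_b)

# ... and the ratio should grow as T = sum(x_i) grows.
ratios_by_sum = [joint_ratio([t, 0, 0], lam_lo, lam_hi) for t in range(10)]
print(ratios_by_sum)
```

Here the ratio simplifies to $e^{-n(\lambda_2 - \lambda_1)} (\lambda_2/\lambda_1)^{\sum x_i}$, which depends on the data only through the sum and increases with it when $\lambda_2 > \lambda_1$.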

Uniformly most powerful tests: The Karlin–Rubin theorem

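Monotone likelihood ratios are used to construct uniformly most powerful tests, according to the Karlin–Rubin theorem.[1] Consider a scalar measurement having a probability density function parameterized by a scalar parameter $\theta$, and define the likelihood ratio $\ell(x) = f_{\theta_1}(x)/f_{\theta_0}(x)$. If $\ell(x)$ is monotone non-decreasing in $x$ for any pair $\theta_1 \ge \theta_0$ (meaning that the greater $x$ is, the more likely $H_1$ is), then the threshold test:

$$\varphi(x) = \begin{cases} 1 & \text{if } x > x_0 \\ 0 & \text{if } x < x_0 \end{cases}$$

- where $x_0$ is chosen so that $\operatorname{E}_{\theta_0} \varphi(X) = \alpha$

is the UMP test of size $\alpha$ for testing $H_0 : \theta \le \theta_0$ vs. $H_1 : \theta > \theta_0$.

Note that exactly the same test is also UMP for testing $H_0 : \theta = \theta_0$ vs. $H_1 : \theta > \theta_0$.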
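A minimal sketch of such a threshold test, assuming a single observation from a normal distribution with unknown mean $\theta$ and known standard deviation (a family with the MLR in $x$). The function names `ump_threshold` and `power` and all numerical values are illustrative assumptions.

```python
from statistics import NormalDist

def ump_threshold(theta0, sigma, alpha):
    """Cutoff x0 with P_{theta0}(X > x0) = alpha for X ~ Normal(theta0, sigma)."""
    return NormalDist(mu=theta0, sigma=sigma).inv_cdf(1.0 - alpha)

def power(theta, x0, sigma):
    """Rejection probability P_theta(X > x0) of the threshold test."""
    return 1.0 - NormalDist(mu=theta, sigma=sigma).cdf(x0)

theta0, sigma, alpha = 0.0, 1.0, 0.05   # hypothetical test setup
x0 = ump_threshold(theta0, sigma, alpha)
print(f"x0 = {x0:.4f}")                 # about 1.645 for this setup

thetas = [-1.0, 0.0, 0.5, 1.0, 2.0]
powers = [power(t, x0, sigma) for t in thetas]
for t, p in zip(thetas, powers):
    print(f"theta = {t:+.1f}  power = {p:.4f}")
```

The rejection probability equals $\alpha$ at $\theta_0$, stays below it for smaller $\theta$, and increases with $\theta$, which is why the same cutoff serves both the composite and the simple null hypothesis.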

Median unbiased estimation

Monotone likelihood ratios are used to construct median-unbiased estimators, using methods specified by Johann Pfanzagl and others.[2][3] One such procedure is an analogue of the Rao–Blackwell procedure for mean-unbiased estimators: the procedure holds for a smaller class of probability distributions than does the Rao–Blackwell procedure for mean-unbiased estimation but for a larger class of loss functions.[3]: 713

Lifetime analysis: Survival analysis and reliability

If a family of distributions $f_\theta(x)$ has the monotone likelihood ratio property in $T(X)$,

- the family has monotone decreasing hazard rates in $\theta$ (but not necessarily in $T(X)$)

- the family exhibits the first-order (and hence second-order) stochastic dominance in $x$, and the best Bayesian update of $\theta$ is increasing in $T(X)$.

But not conversely: neither monotone hazard rates nor stochastic dominance imply the MLRP.

Proofs

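Let distribution family $f_\theta$ satisfy MLR in $x$, so that for $\theta_1 > \theta_0$ and $x_1 > x_0$:

$$\frac{f_{\theta_1}(x_1)}{f_{\theta_0}(x_1)} \ge \frac{f_{\theta_1}(x_0)}{f_{\theta_0}(x_0)},$$

or equivalently:

$$f_{\theta_1}(x_1)\, f_{\theta_0}(x_0) \ge f_{\theta_1}(x_0)\, f_{\theta_0}(x_1).$$

Integrating this expression twice, we obtain:

- 1: To $x_1$ with respect to $x_0$: $$\int_{\min_x}^{x_1} f_{\theta_1}(x_1)\, f_{\theta_0}(x_0)\, dx_0 \ge \int_{\min_x}^{x_1} f_{\theta_1}(x_0)\, f_{\theta_0}(x_1)\, dx_0;$$ integrate and rearrange to obtain $$\frac{f_{\theta_1}(x)}{f_{\theta_0}(x)} \ge \frac{F_{\theta_1}(x)}{F_{\theta_0}(x)}.$$

- 2: From $x_0$ with respect to $x_1$: $$\int_{x_0}^{\max_x} f_{\theta_1}(x_1)\, f_{\theta_0}(x_0)\, dx_1 \ge \int_{x_0}^{\max_x} f_{\theta_1}(x_0)\, f_{\theta_0}(x_1)\, dx_1;$$ integrate and rearrange to obtain $$\frac{1 - F_{\theta_1}(x)}{1 - F_{\theta_0}(x)} \ge \frac{f_{\theta_1}(x)}{f_{\theta_0}(x)}.$$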

First-order stochastic dominance

Combine the two inequalities above to get first-order dominance:

$$F_{\theta_1}(x) \le F_{\theta_0}(x) \quad \forall x.$$

Monotone hazard rate

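Use only the second inequality above to get a monotone hazard rate:

$$\frac{f_{\theta_1}(x)}{1 - F_{\theta_1}(x)} \le \frac{f_{\theta_0}(x)}{1 - F_{\theta_0}(x)} \quad \forall x.$$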
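Both conclusions can be spot-checked numerically. The sketch below assumes a normal location family with unit variance (which has the MLR in $x$) and compares two members on a finite grid; the means, the grid, and the `hazard` helper are illustrative assumptions, and a grid check is a sanity check rather than a proof.

```python
from statistics import NormalDist

lo = NormalDist(mu=0.0, sigma=1.0)   # plays the role of f_{theta_0}
hi = NormalDist(mu=1.0, sigma=1.0)   # plays the role of f_{theta_1}, theta_1 > theta_0

def hazard(dist, x):
    """Hazard rate f(x) / (1 - F(x))."""
    return dist.pdf(x) / (1.0 - dist.cdf(x))

grid = [i / 10 for i in range(-30, 31)]   # x from -3.0 to 3.0

# First-order stochastic dominance: F_{theta_1}(x) <= F_{theta_0}(x) on the grid.
fosd = all(hi.cdf(x) <= lo.cdf(x) for x in grid)

# Hazard ordering: the higher-theta member has the lower hazard rate on the grid.
hazard_ordered = all(hazard(hi, x) <= hazard(lo, x) for x in grid)

print(fosd, hazard_ordered)
```

Both flags should come out true for this family, matching the two inequalities derived above.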

Uses

Economics

The MLR is an important condition on the type distribution of agents in mechanism design and economics of information, where Paul Milgrom defined "favorableness" of signals (in terms of stochastic dominance) as a consequence of MLR.[4] Most solutions to mechanism design models assume type distributions that satisfy the MLR to take advantage of solution methods that may be easier to apply and interpret.

References

- [1] Casella, G.; Berger, R.L. (2008). "Theorem 8.3.17". Statistical Inference. Brooks / Cole. ISBN 0-495-39187-5.

- [2] Pfanzagl, Johann (1979). "On optimal median unbiased estimators in the presence of nuisance parameters". Annals of Statistics. 7 (1): 187–193. doi:10.1214/aos/1176344563.

- [3] Brown, L.D.; Cohen, Arthur; Strawderman, W.E. (1976). "A complete class theorem for strict monotone likelihood ratio with applications". Annals of Statistics. 4 (4): 712–722. doi:10.1214/aos/1176343543.

- [4] Milgrom, P.R. (1981). "Good news and bad news: Representation theorems and applications". The Bell Journal of Economics. 12 (2): 380–391. doi:10.2307/3003562.