Attribution: see history of Binary search algorithm.

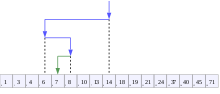

Visualization of the binary search algorithm where 7 is the target value.

| Class | Search algorithm |
|---|---|
| Data structure | Array |
| Worst-case performance | O(log n) |
| Best-case performance | O(1) |
| Average performance | O(log n) |
| Worst-case space complexity | O(1) |
| Optimal | Yes |

Binary search, also called logarithmic search,[1][2] is a search algorithm that finds the position of a target value within a sorted array.[3][4] Binary search compares the target value to the middle element of the array. If they are not equal, binary search eliminates the half in which the target cannot lie and continues on the remaining half. The search repeats until the target value is found. If the search eliminates every element without finding it, the target value is not in the array.

Binary search runs in logarithmic time. It makes $O(\log n)$ comparisons, where $n$ is the number of elements in the array, $O$ is big O notation, and $\log$ is the logarithm. Binary search takes constant space: the algorithm uses the same amount of space regardless of the size of the array.[5] Specialized data structures designed for fast searching, such as hash tables, can be searched faster. However, binary search applies to a much wider range of problems.

The idea of binary search is simple, but implementing it correctly requires attention to subtle details. In particular, its exit conditions and the calculation of the midpoint can be difficult to get right.

There are numerous variations of binary search. Fractional cascading speeds up binary search for the same value in multiple arrays, and it applies to many problems in computational geometry. Exponential search is a variation of binary search that works on arrays with an unbounded number of elements. The binary search tree and B-tree data structures are based on binary search.

Algorithm

Binary search works on sorted arrays. It begins by comparing the element in the middle of the array with the target value. If the target value matches the middle element, its position in the array is returned. If the target value is less than or greater than the middle element, the search continues in the lower or upper half of the array, respectively, eliminating the other half from consideration.[6]

Procedure

Given an array $A$ of $n$ elements with values or records $A_0, A_1, \ldots, A_{n-1}$, sorted such that $A_0 \le A_1 \le \cdots \le A_{n-1}$, and target value $T$, the following subroutine uses binary search to find the index of $T$ in $A$.[6]

1. Set $L$ to $0$ and $R$ to $n - 1$.

2. If $L > R$, the search terminates as unsuccessful.

3. Set $m$ (the position of the middle element) to the floor of $(L + R) / 2$, that is, the greatest integer less than or equal to $(L + R) / 2$.

4. If $A_m < T$, set $L$ to $m + 1$ and go to step 2.

5. If $A_m > T$, set $R$ to $m - 1$ and go to step 2.

6. Now $A_m = T$, so the search is done; return $m$.
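The numbered steps map almost line for line onto code. The Python sketch below is one possible rendering. The function name `binary_search` and the choice to return `None` on failure are illustrative and are not part of the procedure. Python integers do not overflow, so the midpoint can be computed directly as `(left + right) // 2`.

```python
from typing import Optional, Sequence


def binary_search(a: Sequence[int], target: int) -> Optional[int]:
    """Return an index m with a[m] == target, or None if target is absent.

    `a` must be sorted in nondecreasing order.
    """
    left, right = 0, len(a) - 1      # step 1: L = 0, R = n - 1
    while left <= right:             # step 2: stop unsuccessfully once L > R
        m = (left + right) // 2      # step 3: floor of (L + R) / 2
        if a[m] < target:            # step 4: target can only be in the upper part
            left = m + 1
        elif a[m] > target:          # step 5: target can only be in the lower part
            right = m - 1
        else:                        # step 6: a[m] == target
            return m
    return None


print(binary_search([1, 3, 4, 6, 7, 8, 10, 13, 14], 7))  # 4
print(binary_search([1, 3, 4, 6, 7, 8, 10, 13, 14], 5))  # None
```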

This iterative procedure keeps track of the search boundaries with two variables. Some implementations may check whether the middle element is equal to the target at the end of the procedure. This results in a faster comparison loop, but requires one more iteration on average.[7]
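Below is one way to write the variant that defers the equality check. The loop only asks whether the middle element is greater than the target, and a single equality comparison follows the loop. This sketch assumes the midpoint is the ceiling of $(L + R) / 2$, so that setting $L$ to $m$ always shrinks the range. The function name is hypothetical.

```python
from typing import Optional, Sequence


def binary_search_deferred(a: Sequence[int], target: int) -> Optional[int]:
    """Binary search with one comparison per iteration and a final equality test.

    Returns an index of target in the sorted sequence `a`, or None.
    With duplicate keys it returns the rightmost matching index.
    """
    if not a:
        return None
    left, right = 0, len(a) - 1
    while left != right:
        m = (left + right + 1) // 2   # ceiling, so `left = m` always makes progress
        if a[m] > target:
            right = m - 1             # target, if present, lies left of m
        else:
            left = m                  # a[m] <= target, so keep m in the range
    return left if a[left] == target else None
```

Each iteration now makes exactly one comparison. The loop always runs until a single element remains, however, which is why this form needs about one more iteration on average.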

Approximate matches

The above procedure only performs exact matches, finding the position of a target value. However, due to the ordered nature of sorted arrays, it is trivial to extend binary search to perform approximate matches. For example, binary search can be used to compute, for a given value, its rank (the number of smaller elements), predecessor (next-smallest element), successor (next-largest element), and nearest neighbor. Range queries seeking the number of elements between two values can be performed with two rank queries.[8]

- Rank queries can be performed using a modified version of binary search. By returning m on a successful search, and L on an unsuccessful search, the number of elements less than the target value is returned instead.[8]

- Predecessor and successor queries can be performed with rank queries. Once the rank $r$ of the target value is known, its predecessor (if $r > 0$) is the element at position $r - 1$, the last of the $r$ elements smaller than the target value. Its successor is the element after the target value if the target is present in the array, or the element at position $r$ otherwise.[9] The nearest neighbor of the target value is either its predecessor or successor, whichever is closer.

- Range queries are also straightforward. Once the ranks of the two values are known, the number of elements greater than or equal to the first value and less than the second is the difference of the two ranks. This count can be adjusted up or down by one according to whether the endpoints of the range should be considered to be part of the range and whether the array contains keys matching those endpoints.[10]
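All of these approximate queries can be built on a single routine that counts the elements smaller than a value. The Python sketch below assumes a sorted list of distinct numbers. The helper names (`rank`, `predecessor`, `successor`, `nearest`, `count_in_range`) are illustrative. The `rank` routine keeps a half-open range and never stops early on a match, and it yields the same count as the modified search described above.

```python
from typing import Optional, Sequence


def rank(a: Sequence[float], target: float) -> int:
    """Number of elements of the sorted sequence `a` that are smaller than target."""
    left, right = 0, len(a)          # half-open range [left, right)
    while left < right:
        m = (left + right) // 2
        if a[m] < target:
            left = m + 1             # a[0..m] are all smaller than target
        else:
            right = m                # a[m..] are all >= target
    return left


def predecessor(a: Sequence[float], target: float) -> Optional[float]:
    """Largest element smaller than target, or None."""
    r = rank(a, target)
    return a[r - 1] if r > 0 else None


def successor(a: Sequence[float], target: float) -> Optional[float]:
    """Smallest element larger than target, or None (assumes distinct elements)."""
    r = rank(a, target)
    if r < len(a) and a[r] == target:
        r += 1                       # skip the target itself
    return a[r] if r < len(a) else None


def nearest(a: Sequence[float], target: float) -> Optional[float]:
    """Closer of predecessor and successor; ties go to the predecessor."""
    candidates = [x for x in (predecessor(a, target), successor(a, target)) if x is not None]
    return min(candidates, key=lambda x: abs(x - target), default=None)


def count_in_range(a: Sequence[float], low: float, high: float) -> int:
    """Number of elements x with low <= x < high (difference of two ranks)."""
    return rank(a, high) - rank(a, low)
```

`count_in_range` counts a half-open range. To also include `high` when it is present, add one, as the range-query description above notes.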

Performance

The performance of binary search can be analyzed by reducing the procedure to a binary comparison tree, where the root node is the middle element of the array; the middle element of the lower half is left of the root and the middle element of the upper half is right of the root. The rest of the tree is built in a similar fashion. This model represents binary search; starting from the root node, the left or right subtrees are traversed depending on whether the target value is less or more than the node under consideration, representing the successive elimination of elements.[5][11]

The worst case is $\lfloor \log_2 n \rfloor + 1$ iterations of the comparison loop, where the $\lfloor \cdot \rfloor$ notation denotes the floor function, which rounds its argument down to the nearest integer, and $\log_2$ is the binary logarithm. The worst case is reached when the search reaches the deepest level of the tree. This is equivalent to a binary search that has reduced to one element and, in each iteration, always eliminates the smaller subarray out of the two if they are not of equal size.[a][11]

On average, assuming that each element is equally likely to be searched, the procedure will most likely find the target value at the second-deepest level of the tree. This is equivalent to a binary search that completes one iteration before the worst case, reached after about $\log_2(n) - 1$ iterations. However, the tree may be unbalanced, with the deepest level partially filled. Equivalently, the array may not be divided perfectly by the search in some iterations, and half of the time the smaller subarray is eliminated. The actual average number of iterations is slightly higher, at $\lfloor \log_2 n \rfloor + 1 - \frac{2^{\lfloor \log_2 n \rfloor + 1} - \lfloor \log_2 n \rfloor - 2}{n}$ iterations.[5] In the best case, where the target value is the middle element of the array, its position is returned after one iteration.[12] In terms of iterations, no search algorithm that works only by comparing elements can exhibit better average and worst-case performance than binary search.[11]
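The iteration counts can be checked by brute force. This sketch runs the basic procedure on the keys $0, 1, \ldots, n - 1$ and counts the iterations needed for every possible successful target. It then compares the worst case with $\lfloor \log_2 n \rfloor + 1$ and the mean with the closed form $\lfloor \log_2 n \rfloor + 1 - (2^{\lfloor \log_2 n \rfloor + 1} - \lfloor \log_2 n \rfloor - 2)/n$. It assumes that every key is equally likely to be searched and covers successful searches only. The helper names are hypothetical.

```python
def iterations_to_find(n: int, index: int) -> int:
    """Iterations the basic procedure needs to find position `index` among n distinct sorted keys."""
    left, right, iterations = 0, n - 1, 0
    while left <= right:
        iterations += 1
        m = (left + right) // 2
        if m < index:            # with keys 0..n-1, comparing keys is comparing positions
            left = m + 1
        elif m > index:
            right = m - 1
        else:
            return iterations
    raise ValueError("index out of range")


def average_iterations_formula(n: int) -> float:
    """Closed form for the mean number of iterations over all n successful searches."""
    k = n.bit_length() - 1       # floor(log2 n) for n >= 1
    return k + 1 - (2 ** (k + 1) - k - 2) / n


for n in (7, 8, 1000):
    counts = [iterations_to_find(n, i) for i in range(n)]
    # worst case, measured mean, closed-form mean
    print(n, max(counts), sum(counts) / n, average_iterations_formula(n))
```

Here `n.bit_length()` equals $\lfloor \log_2 n \rfloor + 1$ for $n \ge 1$, so it serves directly as the worst-case figure.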

Each iteration of the binary search procedure defined above makes one or two comparisons, checking if the middle element is equal to the target in each iteration. Again assuming that each element is equally likely to be searched, each iteration makes 1.5 comparisons on average. A variation of the algorithm checks whether the middle element is equal to the target at the end of the search, eliminating on average half a comparison from each iteration. This slightly cuts the time taken per iteration on most computers. In exchange, the search always takes the maximum number of iterations, which on average adds one iteration. Because the comparison loop is performed only $\lfloor \log_2 n \rfloor + 1$ times in the worst case, the slight increase in comparison loop efficiency does not compensate for the extra iteration for all but enormous $n$. Knuth 1998 gives a value of $2^{66}$ (more than 73 quintillion)[13] elements for this variation to be faster.[b][14][15]

Fractional cascading can be used to speed up searches of the same value in multiple arrays. Where $k$ is the number of arrays, searching each array for the target value takes $O(k \log n)$ time; fractional cascading reduces this to $O(k + \log n)$.[16]

Binary search versus other schemes

Sorted arrays with binary search are a very inefficient solution when insertion and deletion operations are interleaved with retrieval. Each such operation takes $O(n)$ time and complicates memory use.[17] Other data structures support much more efficient insertion and deletion, and also fast exact matching. However, binary search applies to a wide range of search problems, usually solving them in $O(\log n)$ time regardless of the type or structure of the values themselves.

Hashing

For implementing associative arrays, hash tables, a data structure that maps keys to records using a hash function, are generally faster than binary search on a sorted array of records;[18] most implementations require only amortized constant time on average.[c][20] However, hashing is not useful for approximate matches, such as computing the next-smallest, next-largest, and nearest key, as the only information given on a failed search is that the target is not present in any record.[21] Binary search is ideal for such matches, performing them in logarithmic time. Binary search also supports all the other operations possible on a sorted array, such as finding the smallest and largest key and performing range searches.[22]

Trees

A binary search tree is a binary tree data structure that works based on the principle of binary search. The records of the tree are arranged in sorted order, and each record in the tree can be searched using an algorithm similar to binary search, taking on average logarithmic time. Insertion and deletion also require on average logarithmic time in binary search trees. This can be faster than the linear time insertion and deletion of sorted arrays, and binary search trees retain the ability to perform all the operations possible on a sorted array, including range and approximate queries.[23]

However, binary search is usually more efficient for searching, because binary search trees will most likely be imperfectly balanced, resulting in slightly worse performance than binary search. This applies even to self-balancing binary search trees, which balance their own nodes, because they rarely produce optimally balanced trees, although the effect is smaller. Although unlikely, the tree may be severely imbalanced, with few internal nodes that have two children, and then the average and worst-case search time approaches $n$ comparisons.[d] Binary search trees take more space than sorted arrays.[25]

Binary search trees lend themselves to fast searching in external memory stored in hard disks, as binary search trees can effectively be structured in filesystems. The B-tree generalizes this method of tree organization; B-trees are frequently used to organize long-term storage such as databases and filesystems.[26][27]

Linear search

Linear search is a simple search algorithm that checks every record until it finds the target value. Linear search can be done on a linked list, which allows for faster insertion and deletion than an array. Binary search is faster than linear search for sorted arrays except if the array is short.[e][29] If the array must first be sorted, that cost must be amortized over any searches. Sorting the array also enables efficient approximate matches and other operations.[30]

Mixed approaches

The Judy array uses a combination of approaches to provide a highly efficient solution.

Set membership algorithms

A related problem to search is set membership. Any algorithm that does lookup, like binary search, can also be used for set membership. There are other algorithms that are more specifically suited for set membership. A bit array is the simplest, and it is useful when the range of keys is limited. It is very fast, requiring only $O(1)$ time. The Judy1 type of Judy array handles 64-bit keys efficiently.
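A minimal bit-array set, assuming keys are nonnegative integers below a known bound `universe`. The class name and the `bytearray` layout (eight keys per byte) are illustrative choices. A membership test is one index computation and one mask test, which gives the constant time mentioned above.

```python
class BitArraySet:
    """Set of integers drawn from range(universe), stored as one bit per possible key."""

    def __init__(self, universe: int) -> None:
        self.universe = universe
        self.bits = bytearray((universe + 7) // 8)    # all bits start at 0

    def add(self, key: int) -> None:
        if not 0 <= key < self.universe:
            raise ValueError("key outside the universe")
        self.bits[key >> 3] |= 1 << (key & 7)          # byte key // 8, bit key % 8

    def __contains__(self, key: int) -> bool:
        # Constant time: locate the byte, then test a single bit.
        return 0 <= key < self.universe and bool(self.bits[key >> 3] & (1 << (key & 7)))


s = BitArraySet(100)
s.add(3)
s.add(64)
print(3 in s, 4 in s)  # True False
```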

For approximate results, Bloom filters, a probabilistic data structure based on hashing, store a set of keys by encoding the keys using a bit array and multiple hash functions. Bloom filters are much more space-efficient than bit arrays in most cases and not much slower: with $k$ hash functions, membership queries require only $O(k)$ time. However, Bloom filters suffer from false positives.[f][g][32]
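A small Bloom filter sketch. The bit-array size `m_bits` and hash count `k_hashes` are free parameters. Deriving the $k$ positions from salted SHA-256 digests is an illustrative assumption, not a recommended hashing scheme. A query inspects $k$ bits, so a negative answer is always correct, while a positive answer may be a false positive.

```python
import hashlib


class BloomFilter:
    """Approximate set: no false negatives, but possible false positives."""

    def __init__(self, m_bits: int, k_hashes: int) -> None:
        self.m = m_bits
        self.k = k_hashes
        self.bits = bytearray((m_bits + 7) // 8)

    def _positions(self, key: str):
        # Derive k bit positions from k salted SHA-256 digests (illustrative choice).
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.m

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.bits[p >> 3] |= 1 << (p & 7)

    def might_contain(self, key: str) -> bool:
        # O(k): checks k bits; True may be a false positive, False is always correct.
        return all(self.bits[p >> 3] & (1 << (p & 7)) for p in self._positions(key))


bf = BloomFilter(m_bits=1024, k_hashes=3)
for word in ("apple", "banana", "cherry"):
    bf.add(word)
print(bf.might_contain("banana"))  # True
```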

Other data structures

There exist data structures that may improve on binary search in some cases for both searching and other operations available for sorted arrays. For example, searches, approximate matches, and the operations available to sorted arrays can be performed more efficiently than binary search on specialized data structures such as van Emde Boas trees, fusion trees, tries, and bit arrays. However, while these operations can always be done at least efficiently on a sorted array regardless of the keys, such data structures are usually only faster because they exploit the properties of keys with a certain attribute (usually keys that are small integers), and thus will be time or space consuming for keys that lack that attribute.[22]

Variations

Uniform binary search

Uniform binary search stores, instead of the lower and upper bounds, the index of the middle element and the number of elements around the middle element that were not eliminated yet. Each step reduces the width by about half. This variation is uniform because the difference between the indices of middle elements and the preceding middle elements chosen remains constant between searches of arrays of the same length.[33]
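A table-driven sketch of uniform binary search. Because the step widths depend only on the array length, they can be precomputed once and reused for every search over arrays of that length. This sketch assumes the widths $\delta_j = \lfloor (n + 2^{j-1}) / 2^j \rfloor$ for $j = 1, 2, \ldots$, stopping at the first zero. That formula is modeled on Knuth's treatment and is not taken from the text above. Positions are 1-based. Position 0 is treated as smaller than every key and, defensively, position $n + 1$ as larger than every key. The function names are hypothetical.

```python
from typing import List, Optional, Sequence


def make_deltas(n: int) -> List[int]:
    """Step widths delta_j = floor((n + 2**(j-1)) / 2**j) for j = 1, 2, ..., ending at 0."""
    deltas = []
    j = 1
    while True:
        d = (n + 2 ** (j - 1)) // 2 ** j
        deltas.append(d)
        if d == 0:
            return deltas
        j += 1


def uniform_binary_search(a: Sequence[int], target: int, deltas: List[int]) -> Optional[int]:
    """Search sorted `a` using only a current position and a table of step widths.

    `deltas` must come from make_deltas(len(a)); it depends only on the length.
    """
    n = len(a)
    if n == 0:
        return None
    i = deltas[0]            # 1-based position of the first (middle) probe
    j = 1                    # index of the next width to apply
    while True:
        # Position 0 acts as a key below everything, position n + 1 as one above.
        if i == 0 or (i <= n and a[i - 1] < target):
            direction = 1
        elif i > n or a[i - 1] > target:
            direction = -1
        else:
            return i - 1     # convert back to a 0-based index
        if deltas[j] == 0:
            return None      # widths exhausted: target is absent
        i += direction * deltas[j]
        j += 1


table = make_deltas(10)      # [5, 3, 1, 1, 0], reusable for any array of length 10
print(uniform_binary_search(list(range(0, 20, 2)), 14, table))  # 7
```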

Fibonacci search

Fibonacci search is a method similar to binary search that successively shortens the interval in which the maximum of a unimodal function lies. Given a finite interval, a unimodal function, and the maximum length of the resulting interval, Fibonacci search finds a Fibonacci number such that if the interval is divided equally into that many subintervals, the subintervals would be shorter than the maximum length. After dividing the interval, it eliminates the subintervals in which the maximum cannot lie until one or more contiguous subintervals remain.[34][35]
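A sketch of Fibonacci search on a continuous interval, under these assumptions: `f` is strictly unimodal with its maximum inside `[a, b]`, and `max_len > 0`. The interval is split into $F$ equal subintervals, and two probes at Fibonacci offsets decide which end can be discarded. Memoizing `f` on the grid shows that each step reuses one earlier probe and needs only one new evaluation. This version stops when two adjacent subintervals remain and picks $F$ so that those two fit within `max_len`. Other formulations handle the final step differently.

```python
from typing import Callable, Dict, Tuple


def fibonacci_search_max(f: Callable[[float], float], a: float, b: float,
                         max_len: float) -> Tuple[float, float]:
    """Bracket the maximum of a unimodal f on [a, b] in an interval of length <= max_len."""
    # Smallest Fibonacci number F (1, 1, 2, 3, 5, ...) such that two of the
    # F equal subintervals of [a, b] together fit within max_len.
    fib = [1, 1]
    while 2 * (b - a) / fib[-1] > max_len:
        fib.append(fib[-1] + fib[-2])
    k = len(fib) - 1
    h = (b - a) / fib[k]                     # width of one subinterval

    cache: Dict[int, float] = {}

    def value(idx: int) -> float:            # f at grid point idx, evaluated at most once
        if idx not in cache:
            cache[idx] = f(a + idx * h)
        return cache[idx]

    lo = 0                                   # bracket covers grid points lo .. lo + fib[k]
    while k > 2:
        x1, x2 = lo + fib[k - 2], lo + fib[k - 1]
        if value(x1) < value(x2):
            lo = x1                          # the maximum cannot lie left of x1
        # otherwise the maximum cannot lie right of x2, and lo stays put
        k -= 1                               # bracket shrinks from fib[k] to fib[k-1] subintervals
    return a + lo * h, a + (lo + fib[k]) * h


print(fibonacci_search_max(lambda x: -(x - 1.234) ** 2, 0.0, 5.0, 1e-3))
```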

Exponential search

Exponential search extends binary search to unbounded lists. It starts by finding the first element whose index is a power of two and whose value is greater than the target value. It then sets that index as the upper bound and switches to binary search. A search takes $\lfloor \log_2 x \rfloor + 1$ iterations of the exponential search and at most $\lfloor \log_2 x \rfloor$ iterations of the binary search, where $x$ is the position of the target value. Exponential search works on bounded lists, but it improves on binary search only if the target value lies near the beginning of the array.[36]
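A sketch of exponential search on an ordinary Python list. For a truly unbounded source, the `bound < len(a)` test would be replaced by whatever signals "past the end", which is an assumption this sketch leaves abstract. The bounding phase probes indices 1, 2, 4, 8, and so on. The binary phase then searches between the last two probes.

```python
from typing import Optional, Sequence


def exponential_search(a: Sequence[int], target: int) -> Optional[int]:
    """Find target in sorted `a` by doubling an upper bound, then binary searching."""
    if not a:
        return None
    bound = 1
    # Exponential phase: probe indices 1, 2, 4, 8, ... until a[bound] > target.
    while bound < len(a) and a[bound] <= target:
        bound *= 2
    # Binary phase over a[bound // 2 .. bound], clipped to the end of the list.
    left, right = bound // 2, min(bound, len(a) - 1)
    while left <= right:
        m = (left + right) // 2
        if a[m] < target:
            left = m + 1
        elif a[m] > target:
            right = m - 1
        else:
            return m
    return None


print(exponential_search([2, 3, 5, 7, 11, 13, 17, 19, 23], 5))  # 2
```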

Interpolation search

Instead of merely calculating the midpoint, interpolation search estimates the position of the target value, taking into account the lowest and highest elements in the array and the length of the array. This is only possible if the array elements are numbers. It works on the basis that the midpoint is not the best guess in many cases; for example, if the target value is close to the highest element in the array, it is likely to be located near the end of the array.[37] When the distribution of the array elements is uniform or near uniform, it makes $O(\log \log n)$ comparisons.[37][38][39]

In practice, interpolation search is slower than binary search for small arrays, as interpolation search requires extra computation, and the slower growth rate of its time complexity compensates for this only for large arrays.[37]
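A sketch of interpolation search, assuming sorted integer keys so that the position estimate can use exact floor division. The probe is placed by linear interpolation between the endpoints of the remaining range, in place of the midpoint. The extra arithmetic per step is the overhead mentioned above.

```python
from typing import Optional, Sequence


def interpolation_search(a: Sequence[int], target: int) -> Optional[int]:
    """Search sorted integer keys by estimating the target's position from the endpoint values."""
    left, right = 0, len(a) - 1
    while left <= right and a[left] <= target <= a[right]:
        if a[left] == a[right]:
            return left                      # every key in range equals target; avoids dividing by zero
        # Linear interpolation between (left, a[left]) and (right, a[right]).
        m = left + (target - a[left]) * (right - left) // (a[right] - a[left])
        if a[m] < target:
            left = m + 1
        elif a[m] > target:
            right = m - 1
        else:
            return m
    return None


print(interpolation_search(list(range(0, 300, 3)), 150))  # 50
```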

Fractional cascading

Fractional cascading is a technique that speeds up binary searches for the same element for both exact and approximate matching in "catalogs" (arrays of sorted elements) associated with vertices in graphs. Searching each catalog separately requires $O(k \log n)$ time, where $k$ is the number of catalogs. Fractional cascading reduces this to $O(k + \log n)$ by storing specific information in each catalog about other catalogs.[16]

Fractional cascading was originally developed to efficiently solve various computational geometry problems, but it also has been applied elsewhere, in domains such as data mining and Internet Protocol routing.[16]

History

In 1946, John Mauchly made the first mention of binary search as part of the Moore School Lectures, the first ever set of lectures regarding any computer-related topic.[40] Every published binary search algorithm worked only for arrays whose length is one less than a power of two[h] until 1960, when Derrick Henry Lehmer published a binary search algorithm that worked on all arrays.[42] In 1962, Hermann Bottenbruch presented an ALGOL 60 implementation of binary search that placed the comparison for equality at the end, increasing the average number of iterations by one, but reducing to one the number of comparisons per iteration.[7] The uniform binary search was presented to Donald Knuth in 1971 by A. K. Chandra of Stanford University and published in Knuth's The Art of Computer Programming.[40] In 1986, Bernard Chazelle and Leonidas J. Guibas introduced fractional cascading as a method to solve numerous search problems in computational geometry.[16][43][44]

Implementation issues

"Although the basic idea of binary search is comparatively straightforward, the details can be surprisingly tricky ..." (Donald Knuth)[2]

When Jon Bentley assigned binary search as a problem in a course for professional programmers, he found that ninety percent failed to provide a correct solution after several hours of working on it,[45] and another study published in 1988 shows that accurate code for it is only found in five out of twenty textbooks.[46] Furthermore, Bentley's own implementation of binary search, published in his 1986 book Programming Pearls, contained an overflow error that remained undetected for over twenty years. The Java programming language library implementation of binary search had the same overflow bug for more than nine years.[47]

In a practical implementation, the variables used to represent the indices will often be of fixed size, and this can result in an arithmetic overflow for very large arrays. If the midpoint of the span is calculated as (L + R) / 2, then the value of L + R may exceed the range of integers of the data type used to store the midpoint, even if L and R are within the range. If L and R are nonnegative, this can be avoided by calculating the midpoint as L + (R − L) / 2.[48]
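The overflow can be reproduced even in Python, whose own integers never overflow, by routing the additions through `ctypes.c_int32`. That type wraps silently like a 32-bit signed integer in C. The sketch below compares the two midpoint formulas on indices near the top of the 32-bit range. The function names are hypothetical, and truncating division is used to mimic C.

```python
import ctypes


def add_int32(x: int, y: int) -> int:
    """Add like a 32-bit signed C int: ctypes wraps silently instead of raising."""
    return ctypes.c_int32(x + y).value


def midpoint_naive(left: int, right: int) -> int:
    total = add_int32(left, right)      # L + R, may wrap to a negative number
    return int(total / 2)               # truncate toward zero, as C integer division does


def midpoint_safe(left: int, right: int) -> int:
    # L + (R - L) / 2: with 0 <= L <= R, both R - L and the final sum stay in range.
    return add_int32(left, (right - left) // 2)


low, high = 2**30, 2**31 - 1            # both fit in a signed 32-bit int
print(midpoint_naive(low, high))        # -536870912 (the sum overflowed)
print(midpoint_safe(low, high))         # 1610612735
```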

If the target value is greater than the greatest value in the array, and the last index of the array is the maximum representable value of L, the value of L will eventually become too large and overflow. A similar problem will occur if the target value is smaller than the least value in the array and the first index of the array is the smallest representable value of R. In particular, this means that R must not be an unsigned type if the array starts with index 0.

An infinite loop may occur if the exit conditions for the loop are not defined correctly. Once L exceeds R, the search has failed and must convey the failure of the search. In addition, the loop must be exited when the target element is found, or in the case of an implementation where this check is moved to the end, checks for whether the search was successful or failed at the end must be in place. Bentley found that, in his assignment of binary search, most of the programmers who implemented binary search incorrectly made an error defining the exit conditions.[7][49]

Library support

Many languages' standard libraries include binary search routines:

- C provides the function bsearch() in its standard library, which is typically implemented via binary search, although the official standard does not require it.[50]

- C++'s Standard Template Library provides the functions binary_search(), lower_bound(), upper_bound() and equal_range().[51]

- COBOL provides the SEARCH ALL verb for performing binary searches on COBOL ordered tables.[52]

- Java offers a set of overloaded binarySearch() static methods in the classes Arrays and Collections in the standard java.util package for performing binary searches on Java arrays and on Lists, respectively.[53][54]

- Microsoft's .NET Framework 2.0 offers static generic versions of the binary search algorithm in its collection base classes. An example would be System.Array's method BinarySearch<T>(T[] array, T value).[55]

- Python provides the bisect module.[56]

- Ruby's Array class includes a bsearch method with built-in approximate matching.[57]

- Go's sort standard library package contains the functions Search, SearchInts, SearchFloat64s, and SearchStrings, which implement general binary search, as well as specific implementations for searching slices of integers, floating-point numbers, and strings, respectively.[58]

- For Objective-C, the Cocoa framework provides the NSArray -indexOfObject:inSortedRange:options:usingComparator: method in Mac OS X 10.6+.[59] Apple's Core Foundation C framework also contains a CFArrayBSearchValues() function.[60]
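As an example of library support, Python's bisect module provides bisect_left and bisect_right, which return insertion points rather than match positions. An exact-match lookup and an inclusive range count can be built on them. The wrapper names index_of and count_between are hypothetical helpers, not part of the module.

```python
from bisect import bisect_left, bisect_right
from typing import Sequence


def index_of(a: Sequence[int], x: int) -> int:
    """Leftmost index of x in sorted `a`, or -1 if x is absent."""
    i = bisect_left(a, x)            # first position where x could be inserted
    return i if i < len(a) and a[i] == x else -1


def count_between(a: Sequence[int], low: int, high: int) -> int:
    """Number of elements with low <= value <= high."""
    return bisect_right(a, high) - bisect_left(a, low)


data = [1, 2, 2, 2, 5, 8]
print(index_of(data, 2), index_of(data, 3), count_between(data, 2, 5))  # 1 -1 4
```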

See also

- Bisection method – the same idea used to solve equations in the real numbers

- Multiplicative binary search - binary search variation with simplified midpoint calculation

Notes and references

Notes

- ^ This happens because binary search will not always divide the array perfectly. Take for example the array [1, 2, ..., 16]. The first iteration will select the midpoint of 8. The left subarray has seven elements, but the right has eight. If the search takes the right path, there is a higher chance that the search will make the maximum number of comparisons.[11]

- ^ Knuth showed on his MIX computer model, intended to represent an ordinary computer, that the average running time of this variation for a successful search is $17.5 \log_2 n + 17$ units of time compared to $18 \log_2 n - 16$ units for regular binary search. The time complexity for this variation grows slightly more slowly, but at the cost of higher initial complexity.[14]

- ^ It is possible to perform hashing in guaranteed constant time.[19]

- ^ The worst binary search tree for searching can be produced by inserting the values in sorted or near-sorted order or in an alternating lowest-highest record pattern.[24]

- ^ Knuth 1998 performed a formal time performance analysis of both of these search algorithms. On Knuth's hypothetical MIX computer, intended to represent an ordinary computer, binary search takes on average $18 \log_2 n - 16$ units of time for a successful search, while linear search with a sentinel node at the end of the list takes units. Linear search has lower initial complexity because it requires minimal computation, but it quickly outgrows binary search in complexity. On the MIX computer, binary search only outperforms linear search with a sentinel if .[11][28]

- ^ This is because setting all of the bits that the hash functions point to for a specific key can affect queries for other keys that share a hash location for one or more of the functions.[31]

- ^ There exist improvements of the Bloom filter which improve on its complexity or support deletion; for example, the cuckoo filter exploits cuckoo hashing to gain these advantages.[31]

- ^ That is, arrays of length 1, 3, 7, 15, 31 ...[41]

Citations

- ^ Willams, Jr., Louis F. (1975). "A modification to the half-interval search (binary search) method". Proceedings of the 14th annual Southeast regional conference on - ACM-SE 14. Proceedings of the 14th ACM Southeast Conference. pp. 95–101. doi:10.1145/503561.503582.

- ^ a b Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Binary search".

- ^ Cormen et al. 2009, p. 39.

- ^ Weisstein, Eric W. "Binary Search". MathWorld.

- ^ a b c Flores, Ivan; Madpis, George (1971). "Average binary search length for dense ordered lists". CACM. 14 (9): 602–603. doi:10.1145/362663.362752. S2CID 43325465.

- ^ a b Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Algorithm B".

- ^ a b c Bottenbruch, Hermann (1962). "Structure and Use of ALGOL 60". Journal of the ACM. 9 (2): 161–211. doi:10.1145/321119.321120. S2CID 13406983. Procedure is described at p. 214 (§43), titled "Program for Binary Search".

- ^ a b Sedgewick & Wayne 2011, §3.1, subsection "Rank and selection".

- ^ Goldman & Goldman 2008, pp. 461–463.

- ^ Sedgewick & Wayne 2011, §3.1, subsection "Range queries".

- ^ a b c d e Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Further analysis of binary search".

- ^ Chang 2003, p. 169.

- ^ Sloane, Neil. Table of n, 2^n for n = 0..1000. Part of OEIS A000079. Retrieved 30 April 2016.

- ^ a b Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Exercise 23".

- ^ Rolfe, Timothy J. (1997). "Analytic derivation of comparisons in binary search". ACM SIGNUM Newsletter. 32 (4): 15–19. doi:10.1145/289251.289255. S2CID 23752485.

- ^ a b c d Chazelle, Bernard; Liu, Ding (2001). "Lower bounds for intersection searching and fractional cascading in higher dimension". Proceedings of the thirty-third annual ACM symposium on Theory of computing - STOC '01. 33rd ACM Symposium on Theory of Computing. pp. 322–329. doi:10.1145/380752.380818. ISBN 1581133499.

- ^ Knuth 1997, §2.2.2 ("Sequential Allocation").

- ^ Knuth 1998, §6.4 ("Hashing").

- ^ Knuth 1998, §6.4 ("Hashing"), subsection "History".

- ^ Dietzfelbinger, Martin; Karlin, Anna; Mehlhorn, Kurt; Meyer auf der Heide, Friedhelm; Rohnert, Hans; Tarjan, Robert E. (August 1994). "Dynamic Perfect Hashing: Upper and Lower Bounds". SIAM Journal on Computing. 23 (4): 738–761. doi:10.1137/S0097539791194094.

- ^ Morin, Pat. "Hash Tables" (PDF). p. 1. Retrieved 28 March 2016.

- ^ a b Beame, Paul; Fich, Faith E. (2001). "Optimal Bounds for the Predecessor Problem and Related Problems". Journal of Computer and System Sciences. 65 (1): 38–72. doi:10.1006/jcss.2002.1822.

- ^ Sedgewick & Wayne 2011, §3.2 ("Binary Search Trees"), subsection "Order-based methods and deletion".

- ^ Knuth 1998, §6.2.2 ("Binary tree searching"), subsection "But what about the worst case?".

- ^ Sedgewick & Wayne 2011, §3.5 ("Applications"), "Which symbol-table implementation should I use?".

- ^ Knuth 1998, §5.4.9 ("Disks and Drums").

- ^ Knuth 1998, §6.2.4 ("Multiway trees").

- ^ Knuth 1998, Answers to Exercises (§6.2.1) for "Exercise 5".

- ^ Knuth 1998, §6.2.1 ("Searching an ordered table").

- ^ Sedgewick & Wayne 2011, §3.2 ("Ordered symbol tables").

- ^ a b Fan, Bin; Andersen, Dave G.; Kaminsky, Michael; Mitzenmacher, Michael D. (2014). Cuckoo Filter: Practically Better Than Bloom. Proceedings of the 10th ACM International on Conference on emerging Networking Experiments and Technologies. pp. 75–88. doi:10.1145/2674005.2674994.

- ^ Bloom, Burton H. (1970). "Space/time Trade-offs in Hash Coding with Allowable Errors". CACM. 13 (7): 422–426. doi:10.1145/362686.362692. S2CID 7931252.

- ^ Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "An important variation".

- ^ Kiefer, J. (1953). "Sequential Minimax Search for a Maximum". Proceedings of the American Mathematical Society. 4 (3): 502–506. doi:10.2307/2032161. JSTOR 2032161.

- ^ Hassin, Refael (1981). "On Maximizing Functions by Fibonacci Search". Fibonacci Quarterly. 19: 347–351.

- ^ Moffat & Turpin 2002, p. 33.

- ^ a b c Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Interpolation search".

- ^ Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Exercise 22".

- ^ Perl, Yehoshua; Itai, Alon; Avni, Haim (1978). "Interpolation search—a log log n search". CACM. 21 (7): 550–553. doi:10.1145/359545.359557. S2CID 11089655.

- ^ a b Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "History and bibliography".

- ^ "2^n − 1". OEIS A000225. Retrieved 7 May 2016.

- ^ Lehmer, Derrick (1960). "Teaching combinatorial tricks to a computer". Proceedings of Symposia in Applied Mathematics. 10: 180–181. doi:10.1090/psapm/010. ISBN 9780821813102.

- ^ Chazelle, Bernard; Guibas, Leonidas J. (1986). "Fractional cascading: I. A data structuring technique" (PDF). Algorithmica. 1 (1–4): 133–162. doi:10.1007/BF01840440. S2CID 12745042.

- ^ Chazelle, Bernard; Guibas, Leonidas J. (1986), "Fractional cascading: II. Applications" (PDF), Algorithmica, 1 (1–4): 163–191, doi:10.1007/BF01840441, S2CID 11232235

- ^ Bentley 2000, §4.1 ("The Challenge of Binary Search").

- ^ Pattis, Richard E. (1988). "Textbook errors in binary searching". SIGCSE Bulletin. 20: 190–194. doi:10.1145/52965.53012.

- ^ Bloch, Joshua (2 June 2006). "Extra, Extra – Read All About It: Nearly All Binary Searches and Mergesorts are Broken". Google Research Blog. Retrieved 21 April 2016.

- ^ Ruggieri, Salvatore (2003). "On computing the semi-sum of two integers" (PDF). Information Processing Letters. 87 (2): 67–71. doi:10.1016/S0020-0190(03)00263-1.

- ^ Bentley 2000, §4.4 ("Principles").

- ^ "bsearch – binary search a sorted table". The Open Group Base Specifications (7th ed.). The Open Group. 2013. Retrieved 28 March 2016.

- ^ Stroustrup 2013, §32.6.1 ("Binary Search").

- ^ "The Binary Search in COBOL". The American Programmer. Retrieved 7 November 2016.

- ^ "java.util.Arrays". Java Platform Standard Edition 8 Documentation. Oracle Corporation. Retrieved 1 May 2016.

- ^ "java.util.Collections". Java Platform Standard Edition 8 Documentation. Oracle Corporation. Retrieved 1 May 2016.

- ^ "List<T>.BinarySearch Method (T)". Microsoft Developer Network. Retrieved 10 April 2016.

- ^ "8.5. bisect — Array bisection algorithm". The Python Standard Library. Python Software Foundation. Retrieved 10 April 2016.

- ^ Fitzgerald 2007, p. 152.

- ^ "Package sort". The Go Programming Language. Retrieved 28 April 2016.

- ^ "NSArray". Mac Developer Library. Apple Inc. Retrieved 1 May 2016.

- ^ "CFArray". Mac Developer Library. Apple Inc. Retrieved 1 May 2016.


Works

- Alexandrescu, Andrei (2010). The D Programming Language. Upper Saddle River, NJ: Addison-Wesley Professional. ISBN 978-0-321-63536-5.

- Bentley, Jon (2000) [1986]. Programming Pearls (2nd ed.). Addison-Wesley. ISBN 0-201-65788-0.

- Chang, Shi-Kuo (2003). Data Structures and Algorithms. Vol. 13. Singapore: World Scientific. ISBN 978-981-238-348-8.

- Cormen, Thomas H.; Leiserson, Charles E.; Rivest, Ronald L.; Stein, Clifford (2009) [1990]. Introduction to Algorithms (3rd ed.). MIT Press and McGraw-Hill. ISBN 0-262-03384-4.

- Fitzgerald, Michael (2007). Ruby Pocket Reference. Sebastopol, CA: O'Reilly Media. ISBN 978-1-4919-2601-7.

- Goldman, Sally A.; Goldman, Kenneth J. (2008). A Practical Guide to Data Structures and Algorithms using Java. Boca Raton: CRC Press. ISBN 978-1-58488-455-2.

- Knuth, Donald (1997). Fundamental Algorithms. The Art of Computer Programming. Vol. 1 (3rd ed.). Reading, MA: Addison-Wesley Professional.

- Knuth, Donald (1998). Sorting and Searching. The Art of Computer Programming. Vol. 3 (2nd ed.). Reading, MA: Addison-Wesley Professional.

- Leiss, Ernst (2007). A Programmer's Companion to Algorithm Analysis. Boca Raton, FL: CRC Press. ISBN 978-1-58488-673-0.

- Moffat, Alistair; Turpin, Andrew (2002). Compression and Coding Algorithms. Hamburg, Germany: Kluwer Academic Publishers. doi:10.1007/978-1-4615-0935-6. ISBN 978-0-7923-7668-2.

- Sedgewick, Robert; Wayne, Kevin (2011). Algorithms (4th ed.). Upper Saddle River, NJ: Addison-Wesley Professional. ISBN 978-0-321-57351-3. Condensed web version: ; book version .

- Stroustrup, Bjarne (2013). The C++ Programming Language (4th ed.). Upper Saddle River, NJ: Addison-Wesley Professional. ISBN 978-0-321-56384-2.